Book Club: 1970, the first great British polling disaster

An extract from Mark Pack’s Polling UnPacked: The History, Uses and Abuses of Political Opinion Polls.

British politics has seen plenty of debate recently – tinged with hope in Tory circles, tinged with fear everywhere else – regarding the question of whether the polls could be wrong. Such discussion tends to concern either the 2016 referendum (when the result wasn’t actually outside the margin of error) or the 1992 general election (when people tend to misremember the polls).

But this month’s book extract, from Mark Pack’s 2022 book Polling UnPacked: The History, Uses and Abuses of Political Opinion Polls, is a reminder that Britain’s polling industry experienced a bigger and more consequential failure long before that. Take it away, Mark…

After three Conservative victories in a row in Britain in the 1951, 1955 and 1959 general elections, there had started to be doubts about whether the Labour Party could ever win again. An influential book was even published in 1960, based on detailed survey work (albeit using a small sample), titled Must Labour Lose?, by Mark Abrams, Richard Rose and Rita Hinden.

Then under Harold Wilson, the Labour Party seemed to have successfully positioned itself as the party for the future, with the ruling Conservatives stuck in the past and mired in scandal. Wilson became prime minister in 1964 following a wafer-thin victory, turning that into a solid majority at the 1966 election. Ahead of the 1970 general election, he and Labour looked to be headed towards a comfortable re-election. The polls said so.

What’s more, the polls had done well in both of Wilson’s previous victories. In 1964, Gallup, NOP and Research Services Limited all rightly gave Labour a small lead in their final polls, with only the Daily Express poll putting the Conservatives slightly ahead. Even that was not such a bad miss, putting the Conservatives 1% ahead, rather than 2% behind. Then, in 1966, all of the pollsters had rightly put Wilson’s Labour well ahead.

For 1970, the polls looked promising for Labour once more. Four of the five pollsters – Gallup, Harris, Marplan and NOP – had Labour ahead in their final polls with leads of between two and ten points over the Conservatives. Only ORC had the Conservatives ahead, by just one point. Yet ORC’s two previous polls had put Labour ahead, as had, in fact, sixteen out of the last seventeen polls (and 25 of the last 27) conducted before polling day. With that backdrop, and with what other pollsters were saying, the final ORC poll putting the Conservatives ahead looked a rogue. Even the Evening Standard, which had commissioned the ORC poll, did not fully back its own polling, caveating its coverage on 18 June 1970 with, “This is one election in which the polls could conceivably get the result wrong, as happened in the Truman–Dewey fight in the USA in 1948.” As it presciently warned, “The one thing which opinion polls do not like is a close finish.”

Then voters voted, and the Conservatives won by two points. Harold Wilson was out of office, and the Conservatives back in power.

It was a dramatic and high-profile failure of polling – Britain’s answer to 1948. It was made worse by 1970 being the first election campaign in which the British newspapers who commissioned polls did not assert copyright over their polls in order to stop other newspapers reporting the findings. No more holding off polling figures until later editions of a newspaper to stop rivals being able to cover them too, and no more letters threatening action for breach of copyright to others for republishing the numbers. Instead, in 1970 each paper’s polls also got coverage elsewhere, and polling coverage dominated media coverage of the campaign, including 8 of the 23 front pages for the Times in the run-up to polling day. This made the collective failure of the polls all the more apparent.

What went wrong? The usually authoritative Nuffield study concluded,

The most plausible explanation for the 1970 failure of the polls (which is also the most convenient for the pollsters) must lie in a late swing back to the Conservatives. If we take the middle of the interviewing period for each of the final polls we get this picture [for the Labour lead] . . .

It gave the following figures to back up that conclusion, with the dates being the middle date of the fieldwork period for each of the polls:

12 June: 9.6% – Marplan

15 June: 7% – Gallup

15 June: 4.1% – NOP

15 June: 2% – Harris

17 June: –1% – ORC

18 June: –2.4% – Actual result

That looks like a clear trend away from Labour, one that seems plausible given what happened late in the campaign. There was a supposed change in national mood caused by a combination of England being knocked out at the quarter-finals stage of the FIFA World Cup on the Sunday before polling day (14 June), followed by a break in the lovely summer weather on the Monday. There was more substantive bad news for the government too, with a surprise set of grim balance-of-payments figures also published that Monday.

There are, however, other ways of presenting that data. If you instead sort the table not by the middle day of fieldwork for each poll but by the final day of fieldwork – and when that is the same, putting the poll that started on an earlier date first – the list becomes:

14 June: 9.6% – Marplan

16 June: 2% – Harris

16 June: 4.1% – NOP

16 June: 7% – Gallup

17 June: –1% – ORC

18 June: –2.4% – Actual result

No more neat trend.
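The difference between the two orderings can be checked with a few lines of Python. This is only a sketch: the leads are copied from the two lists above in the order each list gives them, and the function names are illustrative. The start dates used to break ties are not given in the text, so the code takes the listed order as given rather than re-deriving it.

```python
# Labour lead in percentage points, in the order each list in the text
# presents the polls. The last entry in both is the actual result.
by_mid_date = [
    ("Marplan", 9.6), ("Gallup", 7.0), ("NOP", 4.1),
    ("Harris", 2.0), ("ORC", -1.0), ("Actual result", -2.4),
]
by_final_date = [
    ("Marplan", 9.6), ("Harris", 2.0), ("NOP", 4.1),
    ("Gallup", 7.0), ("ORC", -1.0), ("Actual result", -2.4),
]


def steps(rows):
    """Change in Labour's lead from each entry to the next one."""
    leads = [lead for _, lead in rows]
    return [round(later - earlier, 1) for earlier, later in zip(leads, leads[1:])]


def falls_every_step(rows):
    """True only if Labour's lead drops at every step of the sequence."""
    return all(step < 0 for step in steps(rows))


print(falls_every_step(by_mid_date))    # True: the neat downward trend
print(falls_every_step(by_final_date))  # False: the lead rises twice mid-list
```

Same five polls, same result; only the ordering rule differs, and with three polls sharing a date the tie-break alone is enough to make or unmake the trend.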

The Nuffield study, to be fair, also offered other evidence in favour of the late-swing theory, citing polling carried out shortly after the general election: “On the weekend of June 20, 6% of those who told ORC interviewers that they voted Conservative said that they had intended to vote Labour at the beginning of the campaign. Only 1% of Labour voters said they had switched from Conservative.”

Yet this implies a net rise of only around two percentage points in the Conservative vote share across the campaign from this switching. In fact, ORC had already shown a five-percentage-point rise in Conservative support during the campaign in its polls. Those post-election re-interviews therefore only help explain the movement already captured in the ORC polls, and not the further gap between its final poll and reality. All of that is without getting into the traditional problem that polls taken just after an election often show a victor’s bonus – that is, more people saying they had voted for the winner than actually had.
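The arithmetic behind that "around two percentage points" can be sketched as follows. The 6% and 1% switching rates are the ORC figures quoted above; the party vote shares are round numbers assumed purely for illustration (they are not given in the text), picked to be consistent with a Conservative lead of roughly two points.

```python
def net_conservative_gain(con_share, lab_share, con_from_lab, lab_from_con):
    """Net points added to the Conservative share by two-way switching.

    con_from_lab: fraction of Conservative voters who had intended to vote Labour
    lab_from_con: fraction of Labour voters who had intended to vote Conservative
    """
    gained = con_share * con_from_lab  # points that moved Labour -> Conservative
    lost = lab_share * lab_from_con    # points that moved Conservative -> Labour
    return gained - lost


# ASSUMPTION: illustrative round vote shares, not figures from the text.
CON_SHARE = 46.0
LAB_SHARE = 44.0

net = net_conservative_gain(CON_SHARE, LAB_SHARE, con_from_lab=0.06, lab_from_con=0.01)
print(f"Net Conservative gain: {net:.1f} points")  # Net Conservative gain: 2.3 points
```

Under those assumed shares, the switching accounts for a little over two points, which is less than the five-point rise ORC had already recorded during the campaign.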

Similar doubts apply to the other polling evidence for a late swing cited in the study: “Gallup, going back to 700 of its final sample, found something like a 3% net swing to Conservative amongst those who did not vote as they had said they would.” Note that, at least if the reports were accurate, that was only a 3% swing among those who had changed their minds during the election, not 3% overall: again, a small effect. Moreover, the late re-interviewing from Harris just before polling day showed an increase, not a decrease, in Labour’s lead compared with the earlier Harris fieldwork.

Stronger, but still limited, evidence comes from two other pollsters:

Marplan, in a similar exercise with 664 of its final sample, found 4% switching to Conservative from Labour and between 1% and 2% from Liberal; the reverse movements were much smaller. (However, even with allowance for such late switches, the Marplan figures would still have left Labour in the lead.) NOP in the most elaborate of the re-interviewing exercises found a net gain in the lead to the Conservatives of 4.3%.

So, the ORC, Gallup and Marplan figures are too small to explain away the pollsters’ blushes, and Harris’s figures showed movement in the wrong direction. Only NOP’s figures venture into the right territory by being noticeably larger, but they are the outlier – and still susceptible to that victor’s-bonus problem.

Moreover, other evidence cited by the Nuffield study shows that an increase in the Conservative vote had already been priced into some of the final polls, with pollsters picking up in their campaign polling a rise in Conservative support among people they spoke to compared with earlier in the campaign. All this tentative evidence at least consistently points towards the Conservatives having had a good campaign overall, with rising support, notwithstanding the fact that some of that rise was already captured in the final polls and so cannot explain the polling error.

So what does explain it? Our knowledge is hindered by how much more secretive public polling was back in 1970. Partly this was a matter of practicality (there were no websites on which polling companies could post their data tables); partly this was a matter of the media and the limited details given in poll reports. The year 1970 did see some moves towards responsible self-regulation by pollsters, with a four-point code of practice introduced. Nevertheless, reports were often sparse on details that we are now used to knowing and which are crucial to polling post-mortems, such as the exact dates on which fieldwork took place.

What we do know is that the closest poll – and the one that symbolically had the Conservatives ahead – used polling up until the eve of the election, saw a trend in their favour and projected that trend further in its final calculations. That ORC poll also made an adjustment for differential turnout – that is, a greater willingness on the part of the supporters of one party to actually go and vote than on the part of supporters of another party. If on polling day morning a higher proportion of Conservatives woke up and decided to vote than Labour supporters did on waking up, then that would have increased the Conservative vote share. Differential turnout is also attractive as a possible explanation, as turnout in 1970 was then the lowest in a general election since the Second World War, and turnout did not fall as low again until the 1997 election: that gives space for a decline in Labour turnout boosting the Conservative vote share.
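To see how differential turnout could move the numbers, here is a minimal sketch. Every figure in it is hypothetical, chosen only to show the direction of the effect, and it is not a reconstruction of ORC's actual adjustment, the details of which are not given here.

```python
def vote_shares(support, turnout):
    """Convert stated support into vote shares, weighting each party's
    supporters by the fraction of them who actually turn out."""
    votes = {party: support[party] * turnout[party] for party in support}
    total = sum(votes.values())
    return {party: 100 * cast / total for party, cast in votes.items()}


def conservative_lead(shares):
    return shares["Conservative"] - shares["Labour"]


# HYPOTHETICAL stated support (%) and turnout rates, for illustration only.
support = {"Conservative": 45.0, "Labour": 47.0, "Other": 8.0}
equal_turnout = {"Conservative": 0.72, "Labour": 0.72, "Other": 0.72}
uneven_turnout = {"Conservative": 0.75, "Labour": 0.69, "Other": 0.72}

print(round(conservative_lead(vote_shares(support, equal_turnout)), 1))
print(round(conservative_lead(vote_shares(support, uneven_turnout)), 1))
```

With equal turnout, the shares simply match stated support and Labour stays two points ahead. With Conservative supporters a few points more likely to vote, the same stated support produces a Conservative lead, which is why the idea is tempting as an explanation.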

However, pollsters had not found a differential-turnout problem when studying previous parliamentary elections, and when other pollsters tried adjusting for differential turnout at later general elections, this had very mixed results on polling accuracy. Moreover, ORC was not the only pollster to make differential-turnout adjustments at the 1970 election. So differential turnout is not the magic explanation either – and anyway, for all the things that ORC did, its final poll was also still short of the result.

This leaves the exact cause of the polling miss a mystery or, more likely, in Swiss cheese fashion, due to a combination of factors, each too small to leave that much evidence behind on its own. What is more, it is likely that what went wrong was not a simple list of causes that were common across the polling sector, as the pollsters had been painting different pictures of what was going on during the campaign.

Graphing their polls carried out during the campaign gives a messy pattern: “[It was] a notably wild month when NOP’s trend-lines crossed Marplan’s, ORC’s crossed Harris’s, and Gallup trends crossed themselves.” Different pollsters were wrong in different ways, it appears.

The example of 1970 shows that there are often no easy explanations when the polls go wrong. The apparent easy explanations (such as late swing) are themselves frequently also wrong in whole or in part. Even detailed post-mortems often struggle to come up with the factor or factors, instead identifying a range of things that went wrong to differing degrees and which probably between them explain what happened.

Explaining what happens when polling goes wrong, therefore, is more like investigating someone’s overall health than it is like investigating a murder. With murder, you can hope to reach one clear explanation. With overall health, the more thorough the investigations, the more things you will find that are not quite perfect. You can find major and minor problems, and perhaps rule out some factors, but you will be left with a mixed picture, not a simple answer.

One piece of clarity did come from the polling failures: the late movements in support towards the Conservatives, although not wholly responsible for the polling errors, did at least show up the risks of finishing fieldwork well in advance of polling day. That was an easy lesson for pollsters to learn and to apply to future British elections. Fieldwork moved up to be closer to polling day itself.

As a footnote to the events of 1970, the headlines also said that the pollsters got it wrong at the next general election, held in February 1974. Those headlines were wrong: the polls were pretty accurate, much improved on 1970, and correctly put the Conservatives ahead on votes. The voting system, however, gave Labour the most seats and therefore the label of winner. It was the voting system that got the election wrong, not the pollsters.

Dr Mark Pack is, among other things, the president of the Liberal Democrats. Polling UnPacked: The History, Uses and Abuses of Political Opinion Polls was published by Reaktion Books in 2022. You can get 20% off a copy here using the code polling24.

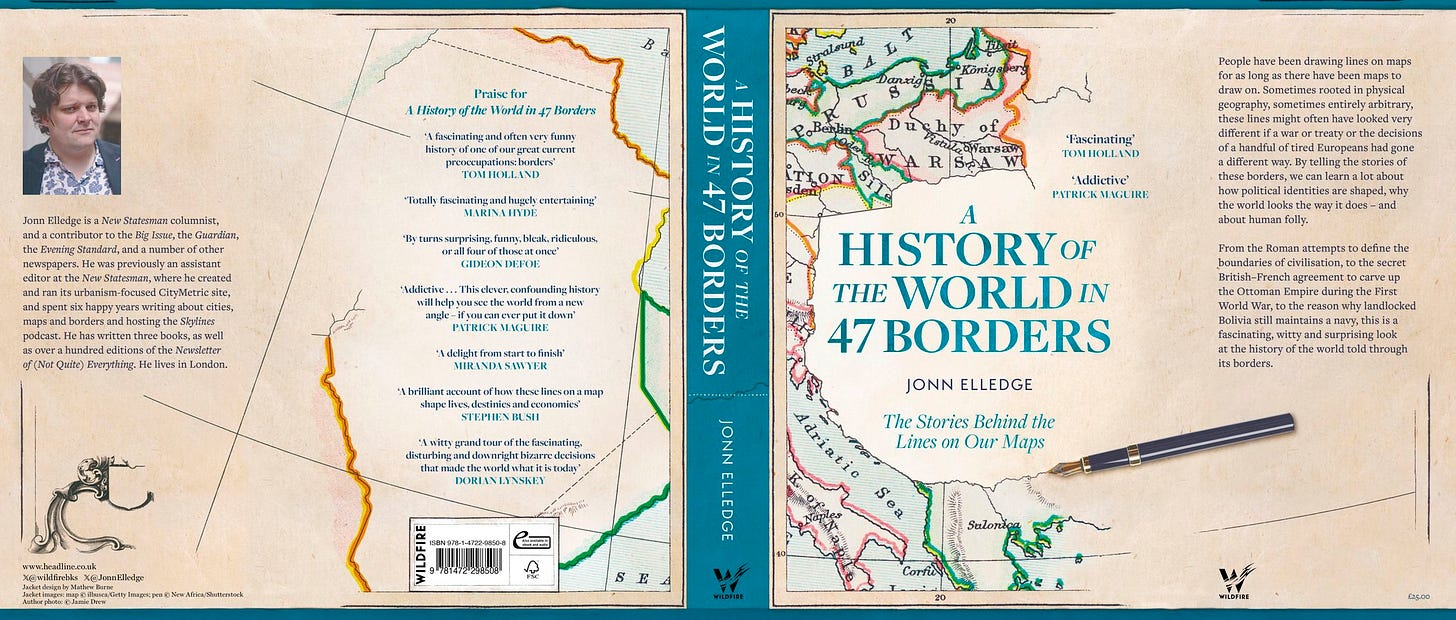

Talking of books, my new one is out in precisely 40 days! Here’s the final cover:

If you are planning on buying it, please do consider ordering it now. Pre-orders are so vital in getting bookshops to pay attention that, if just a fraction of the people on this mailing list order today, it could genuinely change my life.

Also – if you have a book that might appeal to Newsletter of (Not Quite) Everything readers, and would like to alert them to its existence, then why not hit reply and tell me about it...?

Am I right in thinking this was also the first UK election with an exit poll (which got it right)?

Could each reported poll result have influenced the succeeding poll or were they too close together in time to affect each other?